Editor’s Note: This is the sixth post in our Law in Computation series.

Back in the mid-1990s when I was a graduate student, I “interned” at a parole office as part of my methods training in field research. In my first week, another intern—an undergraduate administration of justice student from a local college—trained me in how to complete pre-release reports for those men and women coming out of prison and entering onto parole supervision. The pre-release report was largely centered on a numeric evaluation of the future parolee’s risks and needs.

The instrument used by the parole office was relatively crude, but it exemplified a trend in criminal justice that pits numbers-based tools, designed to predict and categorize system-involved subjects, against more intuitive judgments of legal actors in the system. Each parolee was to be coded as a 1 (low), 2 (medium), or 3 (high), based on the kinds of risk he or she posed for recidivism, violence, drug use, and so on. The same scale was used to assess the level of special needs he or she may have—things like mental health challenges or inadequate vocational skills—that should be addressed to facilitate parole success. Thus, each parolee was characterized with a two-digit score that was to guide the parole plan.

This moment had the potential to shake my natural skepticism about whether we were in the midst of a true socio-legal transformation, where computationally based logics were, in the words of socio-legal scholar Jonathan Simon (1988), transforming the individual from “moral agent to actuarial subject.” That is, until the undergraduate trained me in the tool…

“Everyone’s a 2-2,” he instructed me.

“Everyone?” I asked.

“That’s how I was trained, and that’s how I’m supposed to train you.”

As I came to discover over the many months I spent in this office, this tool and all the other quantitatively based management tools deployed by parole were derided and generally despised by the agents tasked with using them. At best, the agents viewed the tools as a poor substitute for their own intuitive judgment; at worst, they felt the tools hampered their ability to do their jobs.

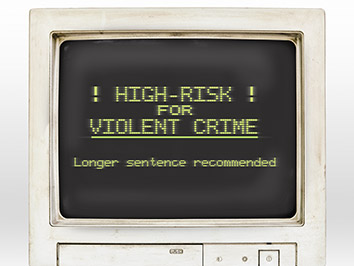

Judgment by algorithm? Image: Shutterstock.

My experience in parole marked the beginning of a very long fascination with how numbers-based techniques are creatively deployed by legal actors in the criminal system. The tools and techniques being developed and implemented today are in a whole other league from the one used by parole in the 1990s. Data scientists are applying sophisticated machine-learning techniques to bail determinations and sentencing decisions. By pivoting away from regression-based forecasting, these techniques purportedly reduce the risk of human bias while maximizing accuracy in obtaining system-defined optimal outcomes.

The field is also being transformed by the exploding market of algorithmic risk instruments, sold by companies in search of lucrative public contracts (Hannah-Moffat 2018). Proprietary products, like Northpointe’s COMPAS tool, are promoted as a solution to inaccuracy and bias in sanctioning, even where they exacerbate such phenomena (these problems also pervade algorithmic policing). Certainly, science-fiction depictions of technological social control, like The Minority Report, where the state’s gaze, via technology, was unremitting, seem much less far-fetched today than they did even a decade ago.

Yet I remain skeptical that the algorithmic turn in criminal justice is poised to turn judges, parole agents, and other front-line legal actors into “automatons” (Savelsberg 1992) whose professional duties are reduced to plugging in data and imposing the decision output. Indeed, the very notion that the two forces (human moral agents vs. the decision-making machine) are pitted against each other in a battle for hegemony is, I think, misguided.

If forced to choose sides, I’d choose the humans, even though the “machine” has, admittedly, evolved at a dizzying speed since Stewart Macaulay (1965) first pondered its effects on legal practice. These inventions are social artifacts, conceived of, put into action, and repurposed for our varied interests, needs, and pleasures. And in the legal sphere, as I have recently argued in my work on quantification in federal sentencing, even if most of the day-to-day business is mundane, routinized, and even dehumanized, the law itself, especially criminal law, remains infused with (at least idealized) values, and its imposition often expresses a whole range of shared human emotion. Legal institutions are ripe sites of the dynamic power relations that constitute social life, and so may be especially resistant to being subsumed by technology.

Criminal justice by AI? Image: Shutterstock.

But we don’t have to choose. Taking the lesson of my brilliant colleague, Bill Maurer, who has utterly mastered the socio-legal world of finance, from “finger counting money” to high-tech cryptocurrency, we should leap in with excitement and a headful of questions about the multi-directional relationships between technology, law, and society to understand whether, how, why, and under what conditions our socio-legal-technological worlds are transforming.

This is precisely what we are endeavoring to do at UC Irvine’s Technology, Law and Society Institute. We have spent the past year engaged in a number of fascinating conversations, where this novice (me) has vacillated between my confident skepticism about technology’s effects and quivering anxiety, asking my colleagues whether robots and other AI machines might really become our superiors in some not-so-distant dystopian future.

Our goal over the year was to begin to map out a research agenda: what we know, what we need to find out, and what is intellectually exciting and important at the intersection of law, society, and technology (primarily computational technology). Part of that process has included planning a Summer Institute for scholars grappling with similar kinds of questions, so that we can expand the conversation. In just a few weeks, that Institute will come to fruition, as several dozen scholars from around the world, trained in a multitude of disciplines, will arrive on campus. We can’t wait!

References

Hannah-Moffat, Kelly

2018 Algorithmic Risk Governance: Big Data Analytics, Race and Information Activism in Criminal Justice Debates. Theoretical Criminology. OnlineFirst: http://journals.sagepub.com/doi/abs/10.1177/1362480618763582

Macaulay, Stewart

1965 Private Legislation and the Duty to Read: Business Run by IBM Machine, the Law of Contracts and Credit Cards. Vanderbilt Law Review 19: 1051-1121.

Savelsberg, Joachim

1992 Law That Does Not Fit Society: Sentencing Guidelines as a Neoclassical Reaction to the Dilemmas of Substantivized Law. American Journal of Sociology 97: 1346-1381.

Simon, Jonathan

1988 The Ideological Effects of Actuarial Practices. Law and Society Review 22: 801-830.